Introduction

What is Data Shaper Server?

The Data Shaper Server is an enterprise runtime, monitoring and automation platform. It is a Java application built to J2EE standards with HTTP and SOAP Web Services APIs providing an additional automation control for integration into existing application portfolios and processes.

The Data Shaper Server provides necessary tools to deploy, monitor, schedule, integrate and automate data integration processes in large scale and complex projects. The Data Shaper Server supports a wide range of application servers: Apache Tomcat, VMware tc Server and Red Hat JBoss Web Server.

The Data Shaper Server simplifies the process of:

To learn more about the architecture of the Data Shaper Server, see the Data Shaper Server Architecture section here below.

Data Shaper Server Architecture

The Data Shaper Server is a Java application distributed as a web application archive (.war) for an easy deployment on various application servers. It is compatible with Windows and Unix-like operating systems.

The Data Shaper Server requires the Java Development Kit (JDK) to run. We do not recommend using Java Runtime Environment (JRE) only, since the compilation of some transformations requires the JDK to function properly.

The Server requires space on the file system to store persistent data (transformation graphs) and temporary data (temporary files, debugging data, etc.). It also requires an external relational database to save run records, permission, users' data, etc.

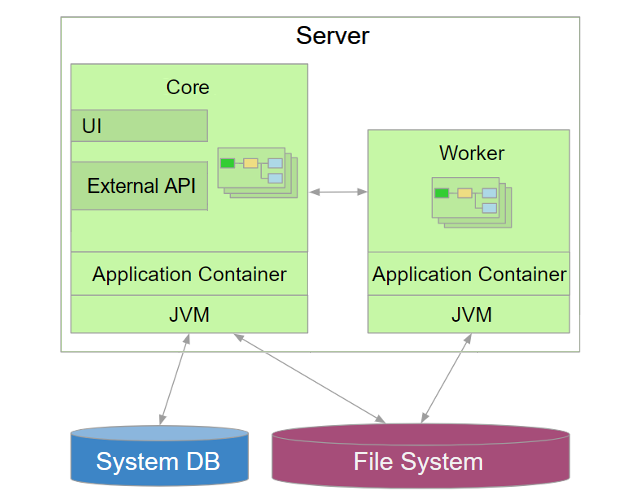

The Data Shaper Server architecture consists of the Core and the Worker.

Data Shaper Core

The Data Shaper Server's Core checks permissions and monitors the UI. For more information, see the Data Shaper Core section below.

Data Shaper Worker

The Worker is a separate process that executes graphs. The purpose of the Worker is to provide a sandboxed execution environment. For more information, see the Data Shaper Worker section below.

Dependencies on External Services

The Server requires a database to store its configuration, user accounts, execution history, etc. It comes bundled with an Apache Derby database to ease the evaluation. To use the Data Shaper Server in a production environment, a relational database is needed.

The Server needs a connection to a SMTP server to be able to send you notification emails.

Users and groups' data can be stored in the database or be read from an LDAP server.

Server Core - Worker Communication

The Server Core receives the Worker’s stdout and stderr. The processes communicate via TCP connections.

Data Shaper Core

The Data Shaper Core is the central point of the Data Shaper Server. It manages and monitors Workers that run the jobs.

The Data Shaper Core is the visible part of the Server with a web-based user interface.

The Data Shaper Core connects to the system database and stores its configuration and service records in it. The system database is required. If it is configured, the Core connects to an SMTP server to send notification emails or to an LDAP server to authenticate users against an existing LDAP database.

Data Shaper Worker

The Worker is a standalone JVM running separately from the Server Core. This provides an isolation of the Server Core from executed jobs (e.g. graphs). Therefore, an issue caused by a job in the Worker will not affect the Server Core.

The Worker does not require any additional installation - it is started and managed by the Server. The Worker runs on the same host as the Server Core, i.e. it is not used for parallel or distributed processes. In the Cluster, each node has its own Worker.

The Worker is a relatively light-weight and simple executor of jobs. It handles job execution requests from the Server Core, but does not perform any high-level job management. It communicates with the Server Core via an API for more complex activities, e.g. to request execution of other jobs, check file permissions, etc.

Configuration

General Configuration

The Worker is started by the Server Core as a standalone JVM process. These default configurations of the Worker can be changed in the Setup:

- Heap memory limits

- Port ranges

- Additional command line arguments

The settings are stored in the usual Server configuration file. The Worker is configured via special configuration properties.

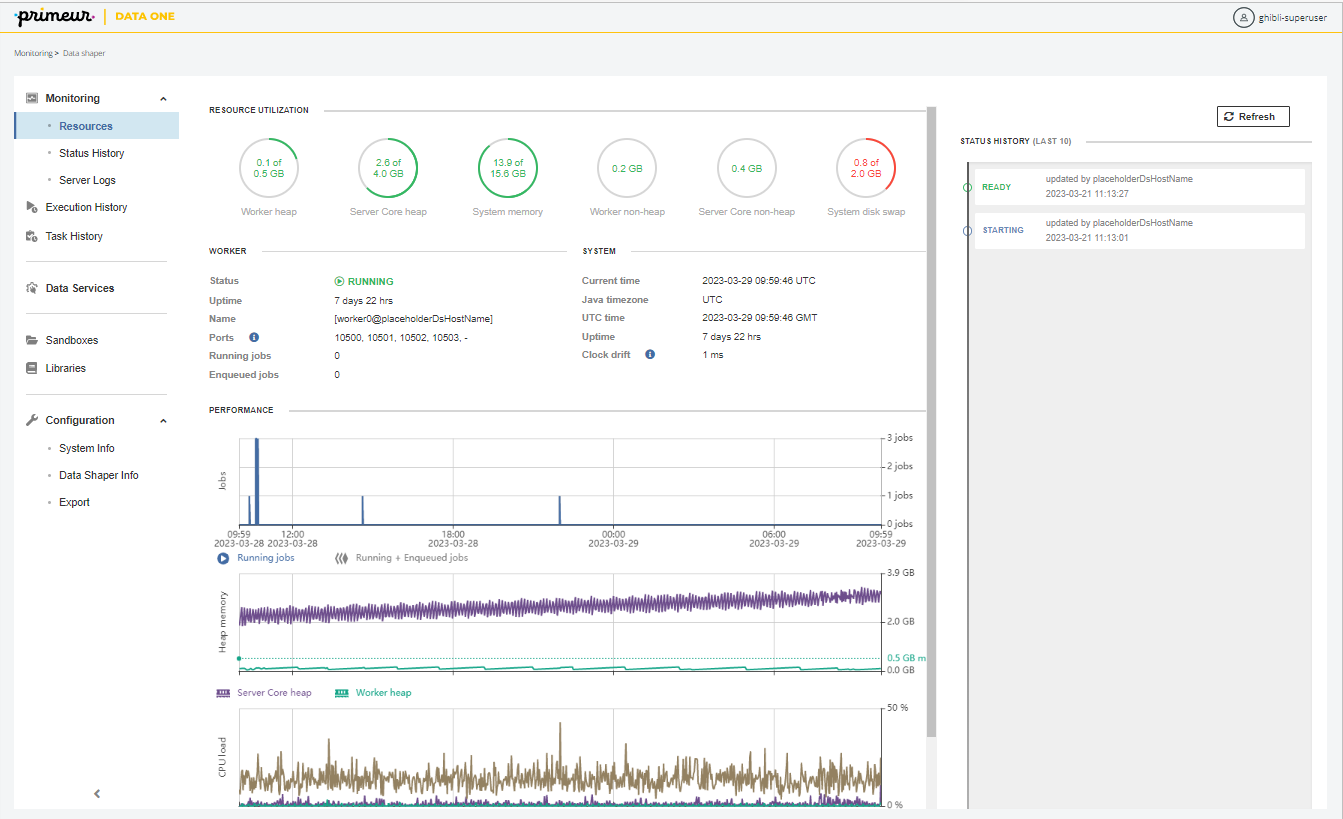

A full command line of the Worker is available in the Monitoring section.

Cluster specific configuration

The Cluster should use a single portRange: all nodes should have identical values of the portRange. That is the preferred configuration, although different ranges for individual nodes are possible.

Management

The Server manages the runtime of the Worker, i.e. it is able to start, stop, restart the Worker, etc. Users don’t need to manually install and start the Worker.

The status of the Worker and its actions are available in the Monitoring Worker section.

Job Execution

By default, all jobs are executed in the Worker; yet the Server Core still keeps the capability to execute jobs. It is possible to set specific jobs or whole sandboxes to run in the Server Core via the worker_execution property on the job or sandbox. It is also possible to disable the Worker completely, in which case all jobs will be executed in the Server Core.

Executing jobs in the Server Core should be an exception. To see where the job was executed, look in the run details in Execution History > Executor field. Jobs started in the Worker also log a message in their log, e.g. Job is executed on Worker:[worker0@node01:10500].

Job Configuration

The following areas of the Worker configuration affect job executions:

- JNDI

Graphs running in the Worker cannot use JNDI as defined in the application container of the Server Core, because the Worker is a separate JVM process. The Worker provides its own JNDI configuration. - Classpath

The classpath is not shared between the Server Core and the Worker.

Data Shaper Cluster

The Data Shaper Cluster allows multiple instances of the Data Shaper Server to run on different hardware nodes and form a computer Cluster. In this distributed environment, data transfer between Data Shaper Server instances is performed by Remote Edges.

The Data Shaper Cluster offers several advantages for Big Data processing:

- High Availability - All nodes are virtually equal; therefore, almost all request can be processed by any Cluster node. This means that if one node is disabled, another node can substitute it.

To achieve high availability, it is recommended to use an independent HTTP load balancer. - Scalability - It allows for increased performance by adding more nodes.

There are two independent levels of scalability implemented: scalability of transformation requests and data scalability.

For general information about the Data Shaper Cluster, see Data Partitioning (Parallel Running) and Data Partitioning in Cluster.

In Cluster environment, you can use several types of sandboxes, see Sandboxes in Cluster.

Updated about 1 year ago