Workflow hacks you may need

Handling process variables

During modeling, you may need to check a variable value (RC variables are the clearest example) or to access the values of a variable that is not a plain String but a "Complex" type with methods (e.g. the FileDetail variable type, available when an instance is triggered by a NewFile trigger).

Process variables are persisted entities, each identified by a name and a value, that live inside a process instance.

Whenever a process instance starts, the variables requested when the Contract was created come to life with their assigned values. The variables declared in a service task come to life during execution, once the flow reaches that service task and it completes without errors.

To read the value of a plain variable, wrap its name in ${...}: ${variableName}.

If it is a complex object, such as a Process Variable Object, it contains multiple values, each accessed through its getter method, e.g. ${file.getRegistryId()}.

If you need to check a variable value, as in the case of an exclusive gateway, the whole condition has to sit inside the braces: ${variableValue == 0} or ${file.getRegistryId() == 12}.

When testing a process variable value in a conditional execution, keep all possible scenarios in mind when implementing the condition. If you design a conditional flow with something like ${variableValue.equals("go here")}, you are assuming that the variable exists and that it is not null. Any condition should check first that the variable exists, then that it is not null, and only then whether it has a specific value.

A statement like this:

${execution.getVariable("variableValue") != null && variableValue != null && variableValue.equals("go here")}

will verify that variableValue exists, that its value is not null, and that its value matches the "go here" string.
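
The three checks can be illustrated outside the engine. The following is a minimal Python sketch that models process variables as a plain dictionary; the function name `guarded_condition` and the dictionary model are assumptions made for illustration, not part of the product.

```python
# Illustrative model only: process variables are represented as a plain dict.
def guarded_condition(variables: dict, name: str, expected: str) -> bool:
    # 1. The variable must exist in the instance.
    if name not in variables:
        return False
    # 2. Its value must not be null.
    value = variables[name]
    if value is None:
        return False
    # 3. Only now is it safe to compare the value.
    return value == expected


scenarios = {
    "variable missing": {},
    "variable is null": {"variableValue": None},
    "different value": {"variableValue": "go there"},
    "matching value": {"variableValue": "go here"},
}

for label, variables in scenarios.items():
    print(label, "->", guarded_condition(variables, "variableValue", "go here"))
```

Only the last scenario takes the branch; the first two would make an unguarded comparison fail instead of simply evaluating to false.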

Error Management

In PRIMEUR Data One, no error management is required.

The only exception is when the user explicitly wants the template to behave differently according to a return code, or wants to intentionally ignore a potential service task exception.

Remote failures on transports and commands are handled by forcibly failing the instance itself!

The instance can then be RESTARTED (the failed instance is aborted and a new one starts from the beginning with the same set of variables and properties), or the single service task can be executed again with RETRY (in this case only the service task that failed is scheduled; anything preceding it is not re-executed).
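
The difference between the two recovery options can be shown with a small simulation. This is a hedged Python sketch, not the product's implementation: the `Instance` class, the status strings, and the task names (`prepare`, `transfer`, `notify`) are all hypothetical.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Instance:
    initial_variables: dict
    variables: dict = field(default_factory=dict)
    status: str = "RUNNING"
    failed_at: Optional[int] = None

    def __post_init__(self):
        # Every instance starts from a copy of its initial variables.
        self.variables = dict(self.initial_variables)


def run(instance, tasks, start=0):
    """Execute tasks in order from `start`; stop at the first failure."""
    instance.status = "RUNNING"
    for index in range(start, len(tasks)):
        _name, action = tasks[index]
        try:
            action(instance.variables)
        except Exception:
            instance.status = "ERROR"
            instance.failed_at = index
            return instance
    instance.status = "COMPLETED"
    instance.failed_at = None
    return instance


def retry(instance, tasks):
    """RETRY: reschedule only the failed task, then continue from there."""
    if instance.status != "ERROR":
        raise ValueError("only an instance in error can be retried")
    return run(instance, tasks, start=instance.failed_at)


def restart(instance, tasks):
    """RESTART: abort the failed instance and start a new one from scratch."""
    instance.status = "ABORTED"
    return run(Instance(instance.initial_variables), tasks)


def build_tasks(remote, calls):
    def prepare(variables):
        calls.append("prepare")

    def transfer(variables):
        calls.append("transfer")
        if not remote["up"]:
            raise ConnectionError("remote endpoint unreachable")

    def notify(variables):
        calls.append("notify")

    return [("prepare", prepare), ("transfer", transfer), ("notify", notify)]


remote, calls = {"up": False}, []
tasks = build_tasks(remote, calls)
failed = run(Instance({"fileName": "report.csv"}), tasks)
remote["up"] = True  # the remote side recovers
retry(failed, tasks)
print(failed.status, calls)
# prints: COMPLETED ['prepare', 'transfer', 'transfer', 'notify']
```

With `retry`, `prepare` runs only once; calling `restart(failed, tasks)` instead would run it a second time in the new instance.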

Buddie the Geek to ground control:

Any time you are on an error boundary event branch, no variable defined in the service task that has just failed is available since... it has failed! For any service task that fails internally (and is modeled with the "Throw Exception" property enabled), only 2 process variables are guaranteed in the Error Boundary Event used to manage the exception:

Hint!

- ${ExceptionMessage}: containing a descriptive message of the exception

- ${ExceptionStackTrace}: containing the full stack trace of the exception (debugging purposes)
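
As an illustration of why the failed task's own variables are missing on the error branch, here is a minimal Python sketch. It assumes a simplified model in which a task's variables are committed only when the task succeeds; `run_service_task`, `failing_transfer`, and `remotePath` are hypothetical names.

```python
import traceback


def run_service_task(task, variables):
    """Return (ok, variables visible on the outgoing branch)."""
    scratch = dict(variables)  # the task works on a private copy
    try:
        task(scratch)
    except Exception as exc:
        # The scratch copy is discarded: nothing the task declared survives.
        visible = dict(variables)
        visible["ExceptionMessage"] = str(exc)
        visible["ExceptionStackTrace"] = traceback.format_exc()
        return False, visible
    return True, scratch


def failing_transfer(variables):
    variables["remotePath"] = "/inbox/report.csv"  # declared by the task
    raise RuntimeError("connection refused by remote host")


ok, visible = run_service_task(failing_transfer, {"fileName": "report.csv"})
print(ok)
print(sorted(visible))
print(visible["ExceptionMessage"])
```

On the error branch, `fileName` (set before the task) and the two exception variables are present, while `remotePath` is not.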

Don't forget to set conditions on every branch coming out of an exclusive gateway.
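
One way to sanity-check this before deploying is to verify, on a few sample variable sets, that exactly one outgoing branch matches. The Python sketch below is only a design aid under stated assumptions: conditions are modeled as Python functions standing in for the `${...}` expressions, and `check_gateway`, the branch names, and the `rc` variable are hypothetical.

```python
def matching_branches(branches, variables):
    """Names of the branches whose condition holds for these variables."""
    return [name for name, condition in branches
            if condition is not None and condition(variables)]


def check_gateway(branches, samples):
    """List the problems found: unconditioned branches and ambiguous or dead-end samples."""
    problems = [f"branch '{name}' has no condition"
                for name, condition in branches if condition is None]
    for variables in samples:
        matched = matching_branches(branches, variables)
        if len(matched) != 1:
            problems.append(
                f"{variables}: expected exactly one branch, got {matched}")
    return problems


samples = [{"rc": 0}, {"rc": 4}, {"rc": 8}]

good = [
    ("ok", lambda v: v["rc"] == 0),
    ("ko", lambda v: v["rc"] != 0),
]
flawed = [
    ("ok", lambda v: v["rc"] == 0),
    ("warning", lambda v: v["rc"] == 4),
    ("other", None),  # condition forgotten
]

print(check_gateway(good, samples))
for problem in check_gateway(flawed, samples):
    print(problem)
```

The `good` gateway reports no problems; the `flawed` one reports the unconditioned branch and the sample with `rc` equal to 8, for which no branch matches.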

Warning! Any triggerable service task that gets a schedule RC other than 0 will throw an exception! This is because it would otherwise wait for an event that will never come, since the schedule has failed. Therefore, always enable "Throw Exception" on your triggerable service tasks and attach an error boundary event for proper management.

Remember!

General modeling rules

In PRIMEUR Data One, VirtualBoxes can be seen as "labels" applied to a Fileset.

They can be applied or removed with the VirtualBox bind and unbind service tasks, and the files having a specific VBox can be listed with the VirtualBox LS service task.

Moreover, you can create templates that are triggered by VirtualBox Bind and Unbind operations, so that instances start from a Contract whenever another instance binds that specific VirtualBox to a Fileset or unbinds it.
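
The "label" analogy can be made concrete with a toy model. The Python sketch below is purely illustrative: `VirtualBoxRegistry`, its methods, and the choice to fire triggers only when a binding actually changes are assumptions, not the product's API or documented behavior.

```python
from collections import defaultdict


class VirtualBoxRegistry:
    """Toy model: a VirtualBox is a label attached to a fileset id."""

    def __init__(self):
        self._labels = defaultdict(set)     # fileset id -> VirtualBox names
        self._triggers = defaultdict(list)  # (operation, vbox) -> callbacks

    def on(self, operation, vbox, callback):
        """Register a callback, standing in for a triggered template."""
        self._triggers[(operation, vbox)].append(callback)

    def bind(self, fileset, vbox):
        if vbox not in self._labels[fileset]:
            self._labels[fileset].add(vbox)
            self._fire("bind", vbox, fileset)

    def unbind(self, fileset, vbox):
        if vbox in self._labels[fileset]:
            self._labels[fileset].remove(vbox)
            self._fire("unbind", vbox, fileset)

    def ls(self, vbox):
        """List the filesets currently carrying the given VirtualBox."""
        return sorted(fs for fs, labels in self._labels.items()
                      if vbox in labels)

    def _fire(self, operation, vbox, fileset):
        for callback in self._triggers[(operation, vbox)]:
            callback(fileset)


registry = VirtualBoxRegistry()
started = []
registry.on("bind", "TO_ARCHIVE", lambda fs: started.append(f"archive {fs}"))

registry.bind("fileset-1", "TO_ARCHIVE")
registry.bind("fileset-2", "TO_ARCHIVE")
print(registry.ls("TO_ARCHIVE"))
print(started)
registry.unbind("fileset-1", "TO_ARCHIVE")
print(registry.ls("TO_ARCHIVE"))
```

Binding the label is enough to start the follow-up work for that fileset; no republish is involved.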

This is far more elegant and cleaner than a republish to trigger new instances/contracts!

When you're modeling a template and configuring a service task, consider only the properties that are available in the "More" section on the right. You can ignore all the rest.

During the first versions of a template, add any logs you need for debugging purposes, but remember to remove them once the template is stable, running properly, and ready for production usage.

Logging service tasks consume resources to produce data that you can also get from the "Workflow Instances" monitor section.

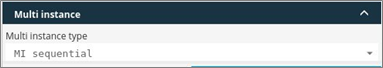

If you have executed an SpLs-like service task, the result is a variable containing a List of ExternalFile objects, which you iterate over with a SubProcess shape. The subprocess is the only shape on which you configure the "Multi instance" properties. Remember to use sequential MI to avoid unexpected behavior.
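
Conceptually, a sequential multi-instance subprocess behaves like an ordinary loop over the list: one element at a time, in order. A minimal Python sketch follows, assuming hypothetical `name` and `size` fields on `ExternalFile` and hypothetical variable names (`file`, `index`, `totalBytes`, `processed`).

```python
from dataclasses import dataclass


@dataclass
class ExternalFile:
    name: str  # hypothetical field
    size: int  # hypothetical field


def run_subprocess_sequentially(files, body, variables):
    """Run the subprocess body once per file, one iteration after another."""
    for index, external_file in enumerate(files):
        # Each iteration gets its own element; shared variables persist.
        scope = {"file": external_file, "index": index}
        body(scope, variables)
    return variables


def body(scope, variables):
    # Safe because no other iteration is touching these variables meanwhile.
    variables["totalBytes"] += scope["file"].size
    variables["processed"].append(scope["file"].name)


listing = [ExternalFile("a.csv", 100), ExternalFile("b.csv", 250)]
result = run_subprocess_sequentially(
    listing, body, {"totalBytes": 0, "processed": []})
print(result)
```

Because iterations never overlap, updates to shared variables happen in a predictable order, which is the kind of behavior sequential MI is meant to preserve.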

Keep in mind!

Only a few of the shapes without the Primeur logo are recommended. Stick to them, since there is no apparent scenario where they are not enough to perform... anything! Here's the list of the good ones:

- Start event

- Subprocess

- Timer boundary event

- Error boundary event

- Exclusive gateway

- Parallel gateway

- Inclusive gateway

- End event

- Sequence flow
