... run searches in Data Watcher 🚀

Simple Searches

Text Search

Enter text, such as a filename, in the Search edit box at the top of the page and click the lens button on the right to run your query.

The autocomplete function will provide suggestions while you type into the Search field. If a saved query matches your characters, it will appear as well.

If no results match your criteria, No results will appear in the drop-down list.

Search by Date

Entering a date, the system will propose you search from that day to the current day or up to the specified day:

gg/mm/yyyy[since]: this query will search for flows from the selected date until today. For example, entering 18/12/2024 [since] will retrieve all results from December 18th up to the current date.

gg/mm/yyyy[until]: this query will search for flows up to the selected date. For example, entering 20/12/2024 [until] will retrieve all results up to December 20th.

These options can be used together to search for flows in the interval specified, e.g. from December 18th, 2024 to December 20th, 2024 as shown below:

Search by Integrity Status

successfully[status]: this query will search for all flows having Integrity Status Completed.

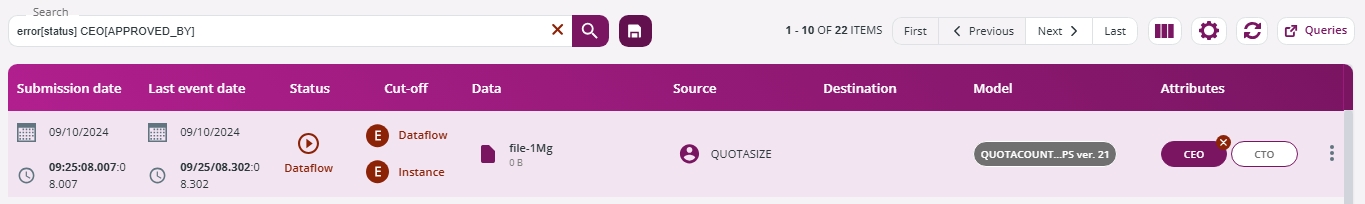

error[status]: this query will search for all flows having Integrity Status Error.

running[status]: this query will search for all flows having Integrity Status Running.

Search by DataFlow Status

The Business Status depends on the DataFlow status of cut-offs applied to the flow events:

onschedule[business]: This query will search for all flows with DataFlow Status On Schedule. They have been completed successfully and are on schedule.

pending[business]: this query will search for all flows with DataFlow Status Pending. They will be completed in the future.

warning[business]: this query will search for all flows that have a DataFlow Status Warning. They are still pending and near the deadline.

error[business]: This query will search for all flows that have a DataFlow Status Error. They are uncompleted or completed after the scheduled time.

Search by Instance Status

The Business Instance Status depends on the Instance status of cut-offs applied to the flow events:

onschedule[businessInstance]: This query will search for all flows with Instance Status On Schedule. They have been completed successfully and are on schedule.

pending[businessInstance]: this query will search for all flows with Instance Status Pending. They will be completed in the future.

warning[businessInstance]: this query will search for all flows that haveInstance Status Warning. They are still pending and near the deadline.

error[businessInstance]: this query will search for all flows that have an Instance Status Error. They are uncompleted or completed after the scheduled time.

For more details refer to the sections Dataflow Inquiry and Setting Cut-offs in Data Watcher.

Search by Source

Click the Source link, to drill down the results.

The Source will be added automatically to the Search field.

Search by Attribute

When you click an Attribute, it will be added automatically to the Search field. The administrator configures attributes, as described in Attributes. For example, in the figure below the CEO attribute has been added to the Search bar.

As an alternative, you can start typing an attribute in the Search field and the autocomplete function will propose the value starting with the typed letters.

Multiple search keys can be combined, separated by a space:

Advanced Searches: the Data Watcher Query Language

Data Watcher Query Language (DWQL) can be used in the Inquiry section search bar to run a search query string on a product-managed entity. This section covers the basics of DWQL as applicable to dataflow instance entities.

Language reference

Literals

Literals are constant values that a user specifies in a DWQL query, for instance, a filename "myfile.txt" is literal. Generally, literals containing blanks/spaces must be enclosed in double quotation marks, otherwise, each space-separated substring is considered a distinct literal on its own. DWQL supports typed literals with specific semantics as shown in the table below:

stringLiteral

invoices "Monthly Invoices" "monthly invoices"

stringLiterals are a simple sequence of characters that will be used for full string match in a case-insensitive fashion

csstringLiteral

invoices "Monthly Invoices" "monthly invoices"

csstringLiterals are a simple sequence of characters that will be used for full string match in a case-sensitive fashion

wcstringLiteral

'Los?Angeles' 'Los *geles'

wcstringLiterals are a simple sequence of characters and "?" / "*" wildcards metacharacters that will be used for case insensitive string match honoring "?" / "*" wildcards. The single quote character, the question mark, the asterisk, and the backslash appearing in the input string as payload must be escaped with a backslash, i.e.: \' \? \* \\

stemstringLiteral

stemstringLiterals, are a simple sequence of characters that will be used for full string match, in a case insensitive fashion and a diacritic insensitive fashion (e.g. "perche" matches "Perché" and "wurstel" matches "Würstel")

wcstemstringLiteral

wcstemstringLiterals, are a simple sequence of characters and wildcards (see also wcstringLiteral definition) that will be used for full string match, in a case insensitive fashion and a diacritic insensitive fashion (e.g. "perche" matches "Perché" and "wurstel" matches "Würstel").

stemprefixstringLiteral

stemprefixstringLiterals, are a simple sequence of characters that will be used for full string and prefix string match, in a case insensitive fashion and in a diacritic insensitive fashion (e.g. "perche" matches "Perché sì" and "wurstel" matches "Würstel Weiner").

wcstemprefixstringLiteral

wcstemprefixstringLiterals, are a simple sequence of characters and wildcards (see also wcstringLiteral definition) that will be used for full string and prefix string match, in a case insensitive fashion and in a diacritic insensitive fashion (e.g. "perche" matches "Perché sì" and "wurstel" matches "Würstel Weiner")

timestampLiteral

01/12/2018 "01/12/2018 12:28" "01/12/2018 12:28:42" "01/12/2018 12:28:42 +0100"

timestampLiterals express a date and a time in the form "dd/mm/yyyy hh:mm:ss ±hh:mm". The time of the day can be specified with or without seconds. In time ranges, 00 seconds are assumed by default for the lower boundary and 59 for the upper boundary. The time of the day can be completely omitted. In time ranges, by default 00:00:00 time is assumed for the lower boundary and 23:59:59 for the upper boundary. Time zone specifications can be omitted. By default, the client timezone is used, if available, otherwise the server time zone is used

timeoffsetLiteral

"last 7 days" "last 6 hours" "last 42 minutes"

Time offset literals express a time duration in the form "last number unitOfMeasure" where unitOfMeasure is one of "days", "hours" or "minutes"

Clauses

Free text search clause A free text search clause is simply a stemStringLiteral or wcstringLiteral that will be used to match any indexed field of dataflow instances.

stemprefixstringLiteral

"my file.txt"

For example, the simple DQQL query "myfile.txt" will match all dataflow instances having an indexed attribute with the value "myfile.txt" or starting with "myfile.txt" (e.g.: "myFile.TXT.previousversion.zip").

wcstemprefixstringLiteral

'my?file.txt'

For example, the simple DQQL query 'my?file.txt' will match all dataflow instances having an indexed attribute with the value "my file.txt" and also those starting with a value matching 'my?file.txt'.

Semantic search clauses Semantic search clauses match specific attributes of dataflow instances and are expressed in the form of literal[searchScope]. Supported semantic search clauses are listed in the table below:

stringLiteral[status]

running[status] successfully[status] error[status]

The status clause search scope matches the physical status value of a dataflow instance specified in the literal; allowed values are: - running - successfully - warning - error

stringLiteral[business]

pending[business] warning[business] onschedule[business] error[business] onschedule-after-cutoff[business]

The business clause search scope matches the business status value of a dataflow instance specified in the literal; allowed values are: - pending - warning - onschedule - error - onschedule-after-cutoff Only dataflow instances associated to a dataflow model and a cutoff definition have a non null business status.

stemstringLiteral[entity] wcstemstringLiteral[entity]

QMGR01[entity] 'dailyreport_2022-08-??'[entity]

The entity clause search scope matches all dataflow instances that have the specified stemstringLiteral / wcstemstringLiteral as a as an data entity, e.g. a file, managed by that dataflow instance (as an input, as an output or in an intermediate stage of the processing).

stemstringLiteral[system] wcstemstringLiteral[system]

QMGR01[system] 'data*'[system]

The system clause search scope matches all dataflow instances that have the specified stemstringLiteral / wcstemstringLiteral as a monitored system.

stemstringLiteral[source] wcstemstringLiteral[source]

QMGR01[source] 'data*'[source]

The source clause search scope matches all dataflow instances that have the specified stemstringLiteral / wcstemstringLiteral as a source of information. Source systems may or may not be monitored system, in some cases their existence is simply detected from a monitored system when it interacts with a remote non-monitored source system.

stemstringLiteral[destination] wcstemstringLiteral[destination]

QMGR01[destination] 'data*'[destination]

The destination clause search scope matches all dataflow instances that have the specified stemstringLiteral / wcstemstringLiteral as a destination of information. Destination systems may or may not be monitored system, in some cases their existence is simply detected from a monitored system when it interacts with a remote non-monitored destination system.

stemstringLiteral[model] wcstemstringLiteral[model]

"SFO Invoices"[model] "SFO*"[model]

The model clause search scope matches all dataflow instances that have a model named as the specified stemstringLiteral / wcstemstringLiteral.

timestampLiteral[since]

01/12/2018[since] "01/12/2018 12:28"[since] "01/12/2018 12:28:42"[since] "01/12/2018 12:28:42 +0100"[since]

The since clause search scope matches all dataflow instances that have finished at or after the specified timestampLiteral.

timestampLiteral[until]

01/12/2018[until] "01/12/2018 12:28"[until] "01/12/2018 12:28:42"[until] "01/12/2018 12:28:42 +0100"[until]

The until search scope matches all dataflow instances that have started at or after the specified timestampLiteral.

timeoffsetLiteral[time]

"last 7 days"[time] "last 6 hours"[time] "last 42 minutes"[time]

The time clause search scope matches all dataflow instances that have finished in the previous timeoffsetLiteral days/hours/minutes from the time the DWQL query is executed.

stringLiteral[match]

has-dataflow[match] without-model[match] with-model[match]

Filters on dataflow instance specific stringLiteral metadata; allowed values are: - has-dataflow: matches all dataflow instances of a Data One native dataflow - with-model[match]: matches all dataflow instances having a model associated - without-model[match]: matches all dataflow instances having not having a model associated.

stemstringLiteral[csstringLiteral] wcstemstringLiteral[csstringLiteral]

PDF[docType] secret[privacy] B4218[branchId] NYC[plantName] 'sales-2021*'[stats]

Data Watcher allows flow model enrichment with custom user-defined model attributes; when these attributes are defined, DWQL is automatically extended with a new search scope named as the attribute, for each available attribute. For instance, if a plantName attribute is defined, having values like "NYC", "LAX" and "SFO", you can match all instances matching a model that has plantName=NYC by specifying a NYC[plantName] clause. Unlike the previously discussed clause search scopes, these scopes are inherently dynamic as they depend on the availability of user-defined flow model attributes. Literal strings between square brackets must not be quoted using double quote chars, if attribute names contain blank chars they must be replaced with underscore, for example if there is an attribute named "Business Unit", the resulting search clause will look like "Research and develop"[Business_Unit].

stringLiteral[flowId]

5fc8e0cead92575f34d843b1[flowId]

Selection of a specific flow by its unique Data Watcher-generated internal id.

stemstringLiteral[dataflow] wcstemstringLiteral[dataflow]

"Daily Payroll"[dataflow] 73a4b441[dataflow]

The dataflow clause search scope matches all dataflow instances that have been natively modeled in as a Data One native dataflow and have stringLiteral as name or as an identifier, so "myname[dataflow]" query is equivalent to "(myname[dataflowName] OR myname[dataflowId)" query.

stringLiteral[dataflowId]

66ff3d37-bfcb-47e6-a1b2-63b50d1f9572[dataflowId]

Selection of a specific flow by its unique Data Watcher-generated internal ID (a.k.a. DFIID).

Query option clauses DWQL language allows for query options, which influence how the query is executed, rather than what the query returns. From a syntax point of view, a query option is a special search clause having a semantic search scope prefixed by "opt-" that appears top level (i.e. not nested) in a query in implicit or explicit AND with other top level clauses.

stringLiteral[opt-ttlsecs]

180[opt-ttlsecs]

This query option limits the time allowed for the query to complete to stringLiteral seconds. When such time expires, the query is forcibly aborted, and an error is returned. For example, 180[opt-ttlsecs] sets such a limit to three minutes.

Logical operators and expression nesting

A DWQL query is an expression made of "AND" / "OR" logical operators, case sensitive, wired together with "(" / ")" balanced parentheses having DWQL clauses as operands. On top of the explicit "AND" operator, also the implicit "AND" operator is supported. This means that if a sequence of two or more clauses appears in a DWQQL query without any logical operator in between, an "AND" operator is assumed.

Considerations about performance

The execution time of a query is mainly influenced by two factors:

Size of the underlying database, i.e. the quantity of dataflow instances stored in the database

The computational complexity of the query itself

The complexity of the query is mainly influenced by two factors:

The number of documents that need to be scanned and returned

DWQL queries are designed to take advantage of indexes, but on very large databases even the scanning of an index can be costly

The filter clauses applied to string values

As a general rule, a full string match (i.e. stringLiteral and stemstringLiteral) or prefix match (i.e. stemprefixstringLiteral) can be much more performant than its counterpart allowing wildcards based pattern matching (i.e. wcstemstringLiteral and wcstemprefixstringLiteral)

Moreover, not all wildcard patterns have the same weight, as a general rule a pattern including multiple wildcards and/or including a wildcard at the beginning of the string can be very costly

Performance optimization tips:

Set a sensible retention policy for the data in your database

You can regularly run Data Watcher data cleaner command (mongodb-cleaner.sh) to implement your policy

Limit the number of returned documents by filtering them up-front

In most common Data Watcher scenarios, an effective way of restricting the number documents/indexes entries to be analyzed is to add sensible time based clauses to the query (e.g. "last 3 hours"[time])

Express your query with semantic search clauses instead of free text search clauses, when possible

As a general rule, semantic search clauses are underpinned by more selective indexes

Express filters on string values in the simplest form possible

Don't use wildcards if you don't need to

Express your wildcard filter in a simple form

Finally, if you really do need to run a complex query but the default time allowed for a query to complete it is not enough, you can allow for more time using the previously discussed [opt-ttlsecs] query options.

Examples

DWQL query: invoice Description: this query returns all dataflow instances having at least one value in its indexed field starting with "invoice".

DWQL query: invoice AND payroll Description: this query returns all dataflow instances having both "invoice" and "payroll" as value of an indexed field. This example gives the same results as the following one, which uses the AND operator implicitly.

DWQL query: invoice payroll Description: this query returns all dataflow instances having both "invoice" and "payroll" as value of an indexed field. Compared to the previous example, this example uses the AND operator implicitly.

DWQL query: 'MonthlySales-2021-??.txt'[entity] 180[opt-ttlsecs] Description: this query returns all dataflow instances that contain a file name conforming to the pattern 'MontlySales-2021-??.txt', and allows 3 minutes for the query to complete before aborting.

DWQL query: myfile1.txt AND mymodel1 Description: this query returns all dataflow instances having both myfile1.txt and mymodel1 as the value of an indexed field.

DWQL query: "SFO Invoices"[model] AND (warning[business] OR error[business]) AND "last 45 minutes"[time] Description: this query returns all dataflow instances associated with "SFO Invoices" model and having experienced a cut-off warning or a cut-off error in the last 45 minutes.

DWQL query: myfile1.txt AND error[status] AND "last 2 hours"[time] Description: this query returns all dataflow instances having myfile1.txt as the value of an indexed field that has experienced an error in the last two hours.

DWQL query: (ACMESERVER[system] OR ACMESERVER[source] OR ACMESERVER[destination] ) AND "last 2 hours"[time] Description: this query matches all dataflow instances terminated in the last 2 hours and involving "ACMESERVER" system in each possible role (monitored, non-monitored, source or destination).

Last updated