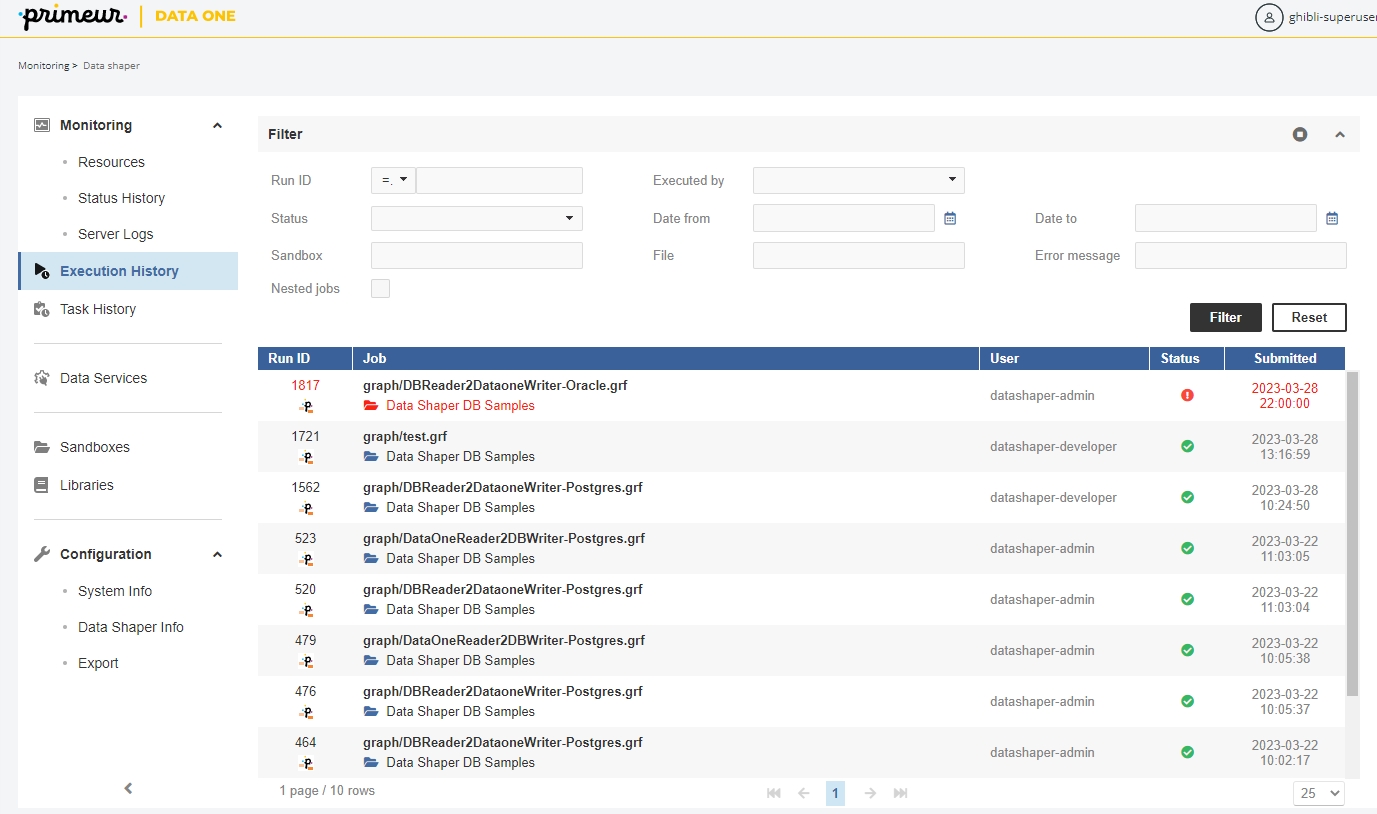

Execution History

Execution History shows the history of all jobs that the Server has executed. You can use it to find out why a job failed, see the parameters that were used for a specific run, and much more.

The table shows basic information about the job: Run ID, Node, Job file, Executed by, Status, and time of execution. After clicking on a row in the list, you can see additional details of the respective job, such as associated log files, parameter values, tracking and more.

Filtering and ordering

Use the Filter panel to filter the view. By default, only parent tasks are shown (Show executions children) – e.g. master nodes in a Cluster and their workers are hidden by default.

Use the up and down arrows in the table header to sort the list. By default, the latest job is listed first.

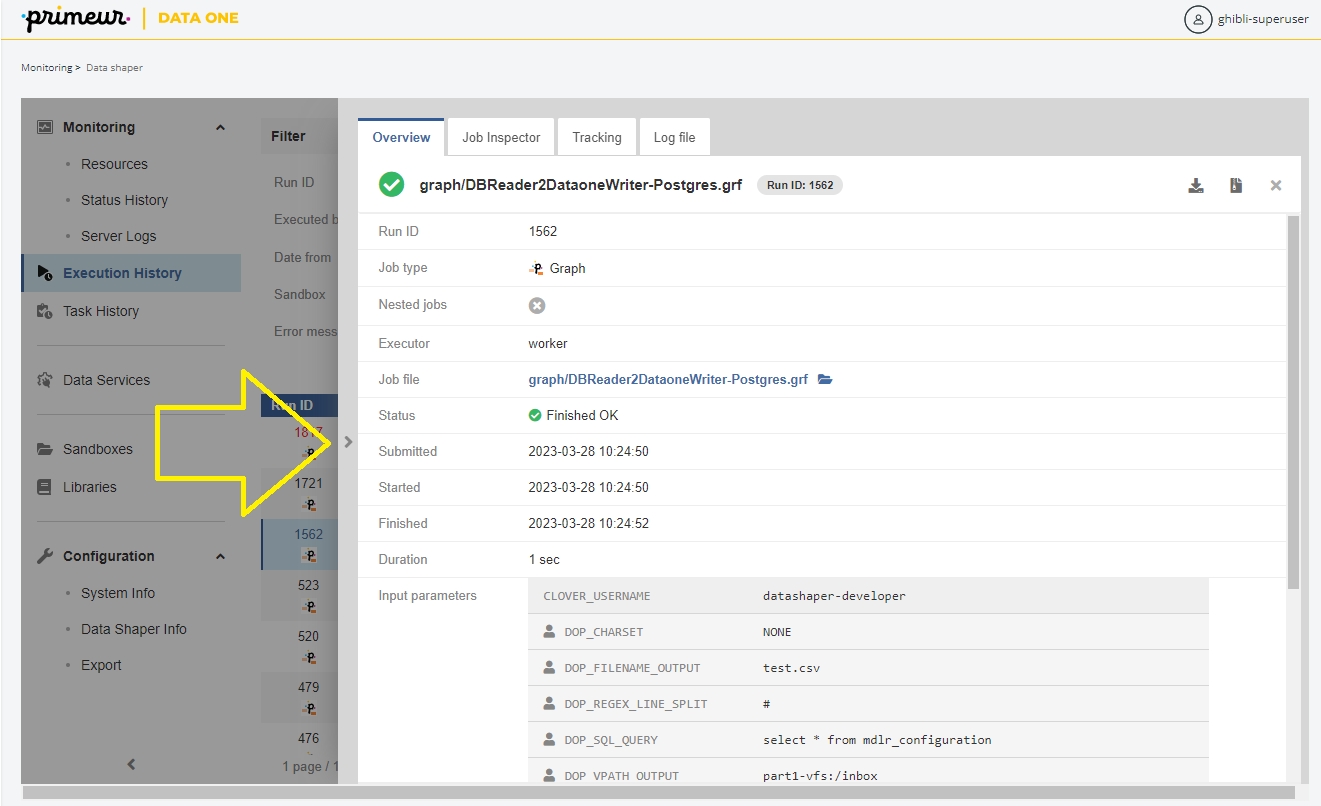

When some job execution is selected in the table, the detail info is shown on the right side.

Run ID

A unique number identifying the run of the job. Server APIs usually return this number as a simple response to the execution request. It is useful as a parameter of subsequent calls for specification of the job execution.

Job type

A type of a job as recognized by the Server. STANDALONE for graph, MASTER for the main record of partitioned execution in a Cluster, PARTITION_WORKER for the worker record of partitioned execution in a Cluster.

Nested jobs

Indication that this job execution has or has not any child execution.

Executor

If it runs on Worker, it contains the text "worker".

Executed by

The user who executed the job using some API/GUI.

Sandbox

The sandbox containing a job file. For jobs which are sent together with an execution request, so the job file doesn’t exist on the Server site, it is set to the "default" sandbox.

Job file

A path to a job file, relative to the sandbox root. For jobs which are sent together with an execution request, so the job file doesn’t exist on the Server site, it is set to generated string.

Status

Status of the job execution. ENQUEUED - waiting in job queue to start (see Job Queue), READY - preparing for execution start, RUNNING - processing the job, FINISHED OK - the job finished without any error, ABORTED - the job was aborted directly using some API/GUI, ERROR - the job failed, N/A (not available) - the server process died suddenly, so it couldn’t properly abort the jobs. After restart, the jobs with unknown status are set as N/A.

Submitted

Server date-time (and time zone) when the execution request arrived. The job can be enqueued before it starts, see Job Queue for more details.

Started

Server date-time (and time zone) of the execution start. If the job was enqueued, the Started time is the actual time that it was taken from the queue and started. See Job Queue for more details.

Finished

Server date-time (and time zone) of the execution finish.

Duration

Execution duration.

Failed component ID

If the job failed due the error in a component, this field contains the ID of the component.

Error message

If the job failed, this field contains the error description.

Exception

If the job failed, this field contains error stack trace.

Input parameters

A list of input parameters passed to the job. A job file can’t be cached, since the parameters are applied during loading from the job file. The job file isn’t cached, by default. Note: you can display whitespace characters in parameters' values by checking the Show white space characters option.

Hint!

By clicking the arrow on the left side of the detail pane (see the figure below), you can expand the detail pane for easier analysis of job logs.

Executions hierarchy may be rather complex, so it’s possible to filter the content of the tree by the fulltext filter. However when the filter is used, the selected executions aren’t hierarchically structured.

Job Inspector

The Job Inspector tab, contains viewing tool for selected job. See: Job Inspector.

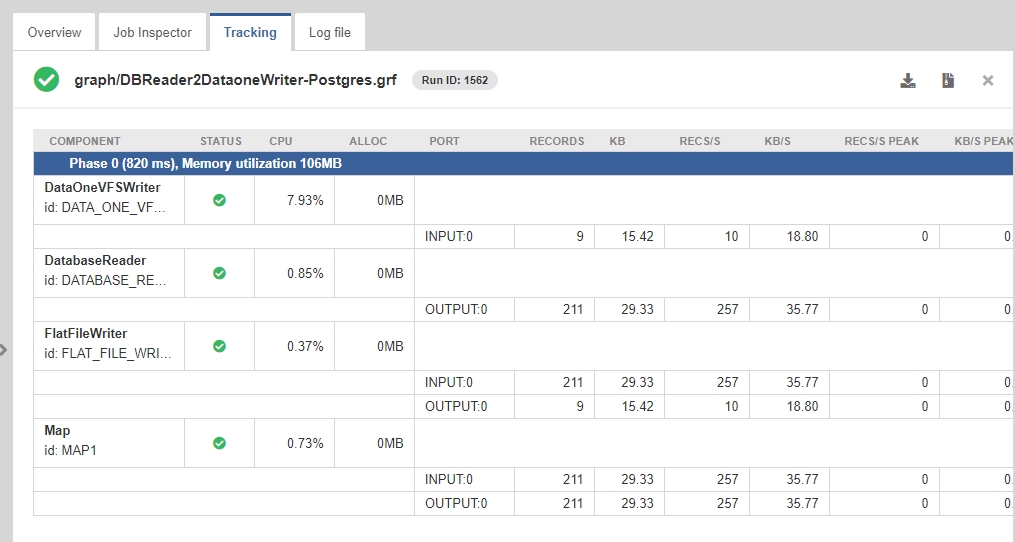

Tracking

The Tracking tab, contains details about the selected job:

Component

The name and the ID of the component.

Status

Status of data processing in the respective component. - FINISHED_OK: data was successfully processed - ABORTED: data processing has been aborted - N_A: status unknown - ERROR: an error occurred while data was processed by the component

CPU

CPU usage of the component.

Alloc

The cumulative number of bytes on heap allocated by the component during its lifetime.

Port

Component’s ports (both input and output) that were used for data transfer.

Records

The number of records transferred through the port of the component.

kB

Amount of data transferred in kB.

Records/s

The number of records processed per second.

KB/s

Data transfer speed in KB.

Records/s peak

The peak value of Records/s.

KB/s peak

The peak value of KB/s.

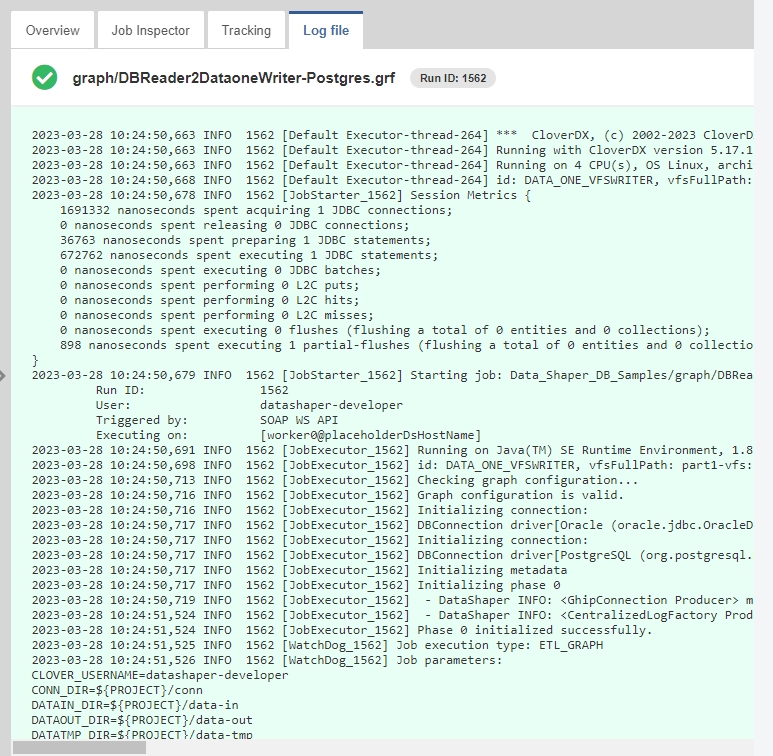

Log File

In the Log file tab, you can see the log of the job run with detailed information. A log with a green background indicates a successfully run job, while a red background indicates an error.

You can download the log as a plain text file by clicking Download log or as a zip archive by clicking Download log (zipped).

Last updated