Hadoop Connections

A Hadoop connection enables Data Shaper to interact with the Hadoop distributed file system (HDFS) and to run MapReduce jobs on a Hadoop cluster. Hadoop connections can be created as either internal or external. See the sections Creating Internal Database Connections and Creating External (Shared) Database Connections to learn how to create them. Defining a Hadoop connection is very similar to defining other connections in Data Shaper; just select Create Hadoop connection instead of Create DB connection.

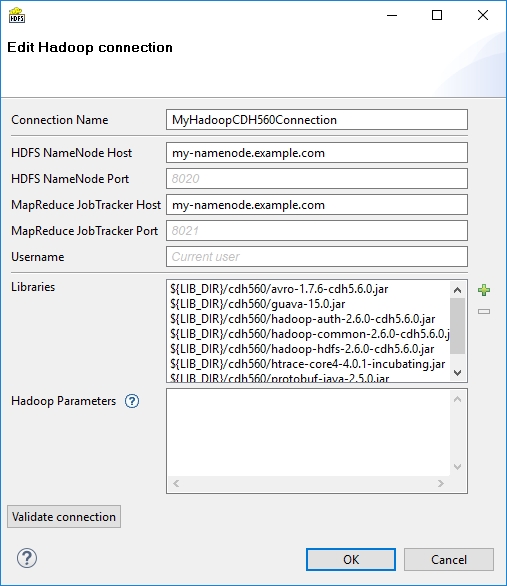

Of the Hadoop connection properties, Connection Name and HDFS NameNode Host are mandatory. Libraries are almost always required as well.

Connection Name

In this field, type a name for this Hadoop connection. If you are creating a new connection, the name you enter here is also used to generate the connection ID. Whereas the connection name is just an informational label, the connection ID is used to reference the connection from various graph components (e.g. in a file URL, as noted in Reading of Remote Files). Once the connection is created, the ID cannot be changed in this dialog, which prevents references from being broken by accident. If you need to change the ID of an existing connection, you can do so in the Properties view.

HDFS NameNode Host & Port

Enter the hostname or IP address of your HDFS NameNode in the HDFS NameNode Host field. If you leave the HDFS NameNode Port field empty, the default port number 8020 is used.

MapReduce JobTracker Host & Port

Enter the hostname or IP address of your JobTracker in the MapReduce JobTracker Host field. This field is optional. If you leave the MapReduce JobTracker Port field empty, the default port number 8021 is used.
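The default-port behaviour described above can be mimicked outside Data Shaper when you want to check that a host and port are reachable at all. The following is a minimal sketch, not Data Shaper code: the function names are made up for illustration, and an open TCP port only shows that something is listening there, not that the Hadoop versions match.

```python
import socket

# Default ports named in the text above.
DEFAULT_NAMENODE_PORT = 8020
DEFAULT_JOBTRACKER_PORT = 8021


def resolve_endpoint(host, port, default_port):
    """Return (host, port), falling back to default_port when port is empty."""
    if not host or not host.strip():
        raise ValueError("host must not be empty")
    if port is None or str(port).strip() == "":
        return host.strip(), default_port
    return host.strip(), int(port)


def is_reachable(host, port, timeout=3.0):
    """Return True if a TCP connection to host:port can be opened."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False
```

For example, `is_reachable(*resolve_endpoint("namenode.example.com", "", DEFAULT_NAMENODE_PORT))` would probe port 8020 on a hypothetical host.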

Username

The name of the user under which you want to perform file operations on HDFS and execute MapReduce jobs.

HDFS works much like an ordinary Unix file system (file ownership, access permissions). Unless your Hadoop cluster has Kerberos security enabled, though, these user names serve only as labels and as a safeguard against accidental data loss.

However, MapReduce jobs cannot be easily executed as a user other than the one that runs the Data Shaper graph. If you need to execute MapReduce jobs, leave this field empty.

The default Username is the OS account name under which the Data Shaper transformation graph runs, so it can be, for instance, your Windows login. The Linux machine running the HDFS NameNode does not need to have a user with the same name defined at all.

Libraries

Here you specify the paths to the Hadoop libraries needed to communicate with your Hadoop NameNode server and (optionally) the JobTracker server. Because some Hadoop versions are incompatible with one another, you have to pick the libraries that match the version of your Hadoop cluster. For a detailed overview of the required Hadoop libraries, see Libraries Needed for Hadoop.

For example, the screenshot above shows the libraries needed for the Cloudera 5.6 distribution of Hadoop. The libraries are available for download from Cloudera’s web site.

The paths to the libraries can be absolute or project relative. Graph parameters can be used as well.
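Before entering the paths, it can help to confirm that every library file actually exists. The sketch below is an illustration only: the function name is hypothetical, relative entries are resolved against a project directory you pass in, and entries that use graph parameters would have to be expanded by hand first because the script does not know their values.

```python
from pathlib import Path


def missing_libraries(library_paths, project_dir):
    """Return the entries of library_paths that do not point to an existing file.

    Relative entries are resolved against project_dir; absolute ones are used as-is.
    """
    base = Path(project_dir)
    missing = []
    for entry in library_paths:
        path = Path(entry)
        if not path.is_absolute():
            path = base / path
        if not path.is_file():
            missing.append(entry)
    return missing
```

An empty result means every listed file was found; anything returned is a candidate for a later java.lang.NoClassDefFoundError.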

Troubleshooting

If you omit a required library, you will typically end up with a java.lang.NoClassDefFoundError.

If you try to connect to a Hadoop server of one version using libraries of a different version, an error usually appears, e.g.: org.apache.hadoop.ipc.RemoteException: Server IPC version 7 cannot communicate with client version 4.
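When scanning a long log, the two error patterns above can be recognised mechanically. This is a small sketch under the assumption that the messages look like the ones quoted in this section; the function name and the hint texts are invented for illustration.

```python
import re

# Pattern taken from the example error message quoted above.
IPC_MISMATCH = re.compile(
    r"Server IPC version (\d+) cannot communicate with client version (\d+)"
)


def diagnose(message):
    """Return a short hint for the two error patterns described above, else None."""
    if "java.lang.NoClassDefFoundError" in message:
        return "A required Hadoop library is probably missing from Libraries."
    match = IPC_MISMATCH.search(message)
    if match:
        server, client = match.groups()
        return (
            f"Library version mismatch: the server speaks IPC version {server}, "
            f"the client libraries speak version {client}."
        )
    return None
```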

Hadoop Parameters

In this simple text field, you can specify various parameters to fine-tune HDFS operations. Usually, leaving this field empty is fine. For the list of available properties and their default values, see the documentation of the core-default.xml and hdfs-default.xml files for your version of Hadoop. Only some of the properties listed there affect Hadoop clients; most are exclusively server-side configuration.

The text entered here has to be in the format of a standard Java properties file. Hover the mouse pointer over the question mark icon for a hint.
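To illustrate the expected format, the sketch below renders a dictionary as key=value lines in Java properties style. It is a simplified illustration, not a complete properties writer: it assumes keys contain no spaces, equals signs or colons (true of Hadoop property names), and the two properties used in the usage note are picked purely as examples.

```python
def format_parameters(params):
    """Render a dict as 'key=value' lines in Java properties style."""
    lines = []
    for key, value in params.items():
        # A backslash starts an escape sequence in a properties file,
        # so literal backslashes in values have to be doubled.
        escaped = str(value).replace("\\", "\\\\")
        lines.append(f"{key}={escaped}")
    return "\n".join(lines)
```

Calling `format_parameters({"dfs.replication": 2, "io.file.buffer.size": 65536})` yields two lines, one per property, that could be pasted into the Hadoop Parameters field.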

Once the Hadoop connection is set up, click the Validate connection button to quickly check whether the parameters you entered can be used to establish a connection to your HDFS NameNode. Note that connection validation is not available if the libraries are located in a (remote) Data Shaper Server sandbox.

Connecting to YARN

If you run YARN (aka MapReduce 2.0, or MRv2) instead of the first-generation MapReduce framework on your Hadoop cluster, the following steps are required to configure the Data Shaper Hadoop connection:

Write an arbitrary value into the MapReduce JobTracker Host field. This value will not be used, but it ensures that MapReduce job execution is enabled for this Hadoop connection.

Add this key-value pair to Hadoop Parameters: mapreduce.framework.name=yarn

In the Hadoop Parameters, add the key yarn.resourcemanager.address with a value in the form of a colon-separated hostname and port of your YARN ResourceManager, e.g. yarn.resourcemanager.address=my-resourcemanager.example.com:8032.

You will probably also have to specify the yarn.application.classpath parameter if the default value from yarn-default.xml does not work. In that case, you will probably find a java.lang.NoClassDefFoundError in the log of the failed YARN application container.
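The YARN-related Hadoop Parameters described above can be assembled with a few lines of code. This is a sketch under stated assumptions: the function name is hypothetical, the default port 8032 is simply the one used in the example above, and the classpath entries (if any) are whatever your cluster requires, joined with commas.

```python
def yarn_parameters(rm_host, rm_port=8032, application_classpath=None):
    """Return Hadoop Parameters text for connecting to YARN."""
    if not rm_host or ":" in rm_host:
        raise ValueError("rm_host must be a bare hostname or IP address")
    port = int(rm_port)
    if not 0 < port < 65536:
        raise ValueError("rm_port must be between 1 and 65535")
    params = {
        "mapreduce.framework.name": "yarn",
        "yarn.resourcemanager.address": f"{rm_host}:{port}",
    }
    # Optional: only needed when the default from yarn-default.xml does not work.
    if application_classpath:
        params["yarn.application.classpath"] = ",".join(application_classpath)
    return "\n".join(f"{key}={value}" for key, value in params.items())
```

The returned text is meant to be pasted into the Hadoop Parameters field; the MapReduce JobTracker Host field still needs an arbitrary value, as noted in the first step.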

Libraries Needed for Hadoop

Hadoop components need to have Hadoop libraries accessible from Data Shaper. The libraries are needed by HDFS and Hive.

The Hadoop libraries are necessary to establish a Hadoop connection; see Hadoop Connections.

The officially supported Hadoop distribution is Cloudera 5, version 5.6.0. Other versions close to this one might work, but compatibility is not guaranteed.

Cloudera 5

The libraries listed below are needed to connect to Cloudera 5.

Common libraries

hadoop-common-2.6.0-cdh5.6.0.jar
hadoop-auth-2.6.0-cdh5.6.0.jar
guava-15.0.jar
avro-1.7.6-cdh5.6.0.jar
htrace-core4-4.0.1-incubating.jar
servlet-api-3.0.jar

HDFS

hadoop-hdfs-2.6.0-cdh5.6.0.jar
protobuf-java-2.5.0.jar

MapReduce

hadoop-annotations-2.6.0-cdh5.6.0.jar
hadoop-mapreduce-client-app-2.6.0-cdh5.6.0.jar
hadoop-mapreduce-client-common-2.6.0-cdh5.6.0.jar
hadoop-mapreduce-client-core-2.6.0-cdh5.6.0.jar
hadoop-mapreduce-client-hs-2.6.0-cdh5.6.0.jar
hadoop-mapreduce-client-jobclient-2.6.0-cdh5.6.0.jar
hadoop-mapreduce-client-shuffle-2.6.0-cdh5.6.0.jar
jackson-core-asl-1.9.2.jar
jackson-mapper-asl-1.9.12.jar
hadoop-yarn-api-2.6.0-cdh5.6.0.jar
hadoop-yarn-client-2.6.0-cdh5.6.0.jar
hadoop-yarn-common-2.6.0-cdh5.6.0.jar

Hive

hive-jdbc-1.1.0-cdh5.6.0.jar
hive-exec-1.1.0-cdh5.6.0.jar
hive-metastore-1.1.0-cdh5.6.0.jar
hive-service-1.1.0-cdh5.6.0.jar
libfb303-0.9.2.jar
slf4j-api-1.7.5.jar
slf4j-log4j12-1.7.5.jar

The libraries can be found in your CDH installation or in a package downloaded from Cloudera.

CDH installation

Required libraries from CDH reside in the following directories.

/usr/lib/hadoop
/usr/lib/hadoop-hdfs
/usr/lib/hadoop-mapreduce
/usr/lib/hadoop-yarn

Third-party libraries are located in the lib subdirectories.

Package downloaded from Cloudera

The files can also be found in a package downloaded from Cloudera, in the following locations and their lib subdirectories.

share/hadoop/common
share/hadoop/hdfs
share/hadoop/mapreduce2
share/hadoop/yarn
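Collecting the jars by hand from several directories is error-prone, so a short script can do the lookup. The sketch below is only an illustration: the function name is hypothetical, and it assumes that each jar sits either directly in one of the given directories or in its lib subdirectory, as described above.

```python
from pathlib import Path


def locate_jars(required, search_dirs):
    """Map each required jar name to the first matching path, or None if not found."""
    found = {}
    for name in required:
        candidates = []
        for directory in search_dirs:
            # Look in the directory itself, then in its lib subdirectory.
            candidates.append(Path(directory) / name)
            candidates.append(Path(directory) / "lib" / name)
        found[name] = next((c for c in candidates if c.is_file()), None)
    return found
```

On a CDH node, `search_dirs` could be the four /usr/lib directories listed above; any jar mapped to None still has to be obtained elsewhere.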

Kerberos Authentication for Hadoop

For user authentication in Hadoop, Data Shaper can use the Kerberos authentication protocol.

To use Kerberos, you have to configure Java, your project and the HDFS connection. For more information, see Kerberos requirements and setting.

Note that the following instructions apply to the Tomcat application server and Unix-like systems.

Java Setting

There are several ways to configure Java for Kerberos. For the first two options (configuration via system properties and via a configuration file), you must modify both setenv.sh in Data Shaper Server and DataShaperDesigner.ini in Data Shaper Designer.

Additionally, in Data Shaper Designer, add the parameters to the Window > Preferences > Data Shaper Runtime > VM parameters pane.

Configuration via system properties

Set the Java system property java.security.krb5.realm to the name of your Kerberos realm, for example: -Djava.security.krb5.realm=EXAMPLE.COM

Set the Java system property java.security.krb5.kdc to the hostname of your Kerberos key distribution center, for example: -Djava.security.krb5.kdc=kerberos.example.com

Configuration via config file

Set the Java system property java.security.krb5.conf to point to the location of your Kerberos configuration file, for example: -Djava.security.krb5.conf="/path/to/krb5.conf"

Configuration via config file in Java installation directory

Put the krb5.conf file into the %JAVA_HOME%/lib/security directory, e.g. /opt/jdk1.8.0_144/jre/lib/security/krb5.conf.
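Because the same options have to be entered in several places, it can be convenient to generate them once. The sketch below builds the -D options for the first two approaches; the function name is hypothetical. It enforces the Java rule that the realm and KDC properties are set together, and it returns the values unquoted, so add quotes yourself where the target file passes them through a shell.

```python
def krb5_jvm_options(realm=None, kdc=None, conf=None):
    """Build the -D options for the Kerberos settings described above."""
    # Java expects the realm and KDC properties to be set together.
    if bool(realm) != bool(kdc):
        raise ValueError("set both realm and kdc, or neither")
    options = []
    if realm:
        options.append(f"-Djava.security.krb5.realm={realm}")
        options.append(f"-Djava.security.krb5.kdc={kdc}")
    if conf:
        options.append(f"-Djava.security.krb5.conf={conf}")
    # An empty list corresponds to the third approach (krb5.conf in the Java directory).
    return options
```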

Project Setting

Copy the .keytab file into the project, e.g. conn/clover.keytab.

Connection Setting

HDFS and MapReduce Connection

Set Username to the principal name, e.g. clover/[email protected].

Set the following parameters in the Hadoop Parameters pane:

cloveretl.hadoop.kerberos.keytab=${CONN_DIR}/clover.keytab

hadoop.security.authentication=Kerberos

yarn.resourcemanager.principal=yarn/[email protected]

mapreduce.app-submission.cross-platform\=true

yarn.application.classpath\=\:$HADOOP_CONF_DIR, $HADOOP_COMMON_HOME/*, $HADOOP_COMMON_HOME/lib/*, $HADOOP_HDFS_HOME/*, $HADOOP_HDFS_HOME/lib/*, $HADOOP_MAPRED_HOME/*, $HADOOP_MAPRED_HOME/lib/*, $HADOOP_YARN_HOME/*, $HADOOP_YARN_HOME/lib/*\:

yarn.app.mapreduce.am.resource.mb\=512

mapreduce.map.memory.mb\=512

mapreduce.reduce.memory.mb\=512

mapreduce.framework.name\=yarn

yarn.log.aggregation-enable\=true

mapreduce.jobhistory.address\=example.com\:port

yarn.resourcemanager.ha.enabled\=true

yarn.resourcemanager.ha.rm-ids\=rm1,rm2

yarn.resourcemanager.hostname.rm1\=example.com

yarn.resourcemanager.hostname.rm2\=example.com

yarn.resourcemanager.scheduler.address.rm1\=example.com\:port

yarn.resourcemanager.scheduler.address.rm2\=example.com\:port

fs.permissions.umask-mode\=000

fs.defaultFS\=hdfs\://nameservice1

fs.default.name\=hdfs\://nameservice1

dfs.nameservices\=nameservice1

dfs.ha.namenodes.nameservice1\=namenode1,namenode2

dfs.namenode.rpc-address.nameservice1.namenode1\=example.com\:port

dfs.namenode.rpc-address.nameservice1.namenode2\=example.com\:port

dfs.client.failover.proxy.provider.nameservice1\=org.apache.hadoop.hdfs.server.namenode.ha.ConfiguredFailoverProxyProvider

type=HADOOP

host=nameservice1

username=clover/[email protected]

hostMapred=Not needed for YARN

If you encounter the error No common protection layer between client and server, set the hadoop.rpc.protection parameter to match your Hadoop cluster configuration.

Hive Connection

Add ;principal=hive/_[email protected] to the URL, e.g. jdbc:hive2://hive.example.com:10000/default;principal=hive/_[email protected]

Set User to the principal name, e.g. clover/[email protected]

Set cloveretl.hadoop.kerberos.keytab=${CONN_DIR}/clover.keytab in Advanced JDBC properties.
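The URL change for Hive can be expressed as a one-line helper. This is a minimal sketch with a hypothetical function name; the principal is passed in by the caller, and the only check made is that it has the service/host@REALM shape.

```python
def hive_kerberos_url(host, port, database, principal):
    """Return a HiveServer2 JDBC URL with the Kerberos principal appended."""
    # Expect a principal of the form service/host@REALM.
    if "/" not in principal or "@" not in principal:
        raise ValueError("principal should look like service/host@REALM")
    return f"jdbc:hive2://{host}:{int(port)}/{database};principal={principal}"
```

The result goes into the URL field of the Hive connection; User and the keytab property are still set separately, as described above.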
